In this week’s edition, Wellesley Hills Financial’s Executive Advisor, Wayne Johnson III, makes the case that Grok 4.0 appears to have taken pride of place among commercial LLMs, examining the architectural, computational, and data advantages of the model and the companies behind it.

Architectural and Computational Advantages of Grok

Grok 4.0’s strengths derive from xAI’s adherence to scaling laws that emphasize data volume, computational resources, and reinforcement learning after pretraining. Diverging from the single-model paradigm of its competitors, Grok 4 Heavy uses a four-agent parallel structure: each agent reasons independently, then the agents share insights, validate one another’s findings, and synthesize a final result, reducing error rates while improving output quality. This collective “study group” approach enables Grok to resolve complex problems, particularly in technical domains where rivals may reach only a partial solution.
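The reason, share, validate, synthesize loop can be illustrated with a toy Python sketch. This is a hypothetical illustration of the general multi-agent pattern, not xAI’s implementation: the “agents” are simple functions rather than LLMs, the task (integer square root) is chosen only because answers are easy to check, and the names `study_group`, `check_isqrt`, and the `agent_*` helpers are invented for this example. It also assumes an exact verifier exists, which is rarely true for open-ended LLM tasks, where validation would more likely mean agents critiquing one another’s drafts.

```python
import math
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List, Optional

# Hypothetical types: an agent maps a problem to a proposed answer,
# and a verifier checks whether a proposed answer is acceptable.
Agent = Callable[[int], int]
Verifier = Callable[[int, int], bool]


def study_group(problem: int, agents: List[Agent], verify: Verifier) -> Optional[int]:
    """Toy multi-agent aggregation: reason, share, validate, synthesize."""
    if not agents:
        return None
    # 1. Independent reasoning: each agent works on the problem in parallel.
    with ThreadPoolExecutor(max_workers=len(agents)) as pool:
        proposals = list(pool.map(lambda agent: agent(problem), agents))
    # 2. Share insights: pool the proposals and count how many agents reached each one.
    votes = Counter(proposals)
    # 3. Validate findings: keep only proposals that pass the check.
    validated: Dict[int, int] = {
        answer: count for answer, count in votes.items() if verify(problem, answer)
    }
    if not validated:
        return None  # no proposal survived validation
    # 4. Synthesize: return the validated answer with the most support.
    return max(validated, key=lambda answer: validated[answer])


# Toy task (assumption for illustration): floor of the square root of n >= 0.
def check_isqrt(n: int, r: int) -> bool:
    return r >= 0 and r * r <= n < (r + 1) * (r + 1)


def agent_exact(n: int) -> int:
    return math.isqrt(n)  # standard-library integer square root (Python 3.8+)


def agent_bisect(n: int) -> int:
    # Binary search maintaining the invariant lo*lo <= n < hi*hi.
    lo, hi = 0, n + 1
    while hi - lo > 1:
        mid = (lo + hi) // 2
        if mid * mid <= n:
            lo = mid
        else:
            hi = mid
    return lo


def agent_sloppy(n: int) -> int:
    return math.isqrt(n) + 1  # deliberately buggy: off by one


def agent_lazy(n: int) -> int:
    return n // 2  # deliberately crude guess


if __name__ == "__main__":
    team = [agent_exact, agent_bisect, agent_sloppy, agent_lazy]
    print(study_group(10, team, check_isqrt))  # prints 3
```

The validation step is what lets the group beat simple majority voting in this toy: `study_group(10, [agent_sloppy, agent_sloppy, agent_exact], check_isqrt)` returns 3, because the two buggy agents agree on 4 but that answer fails the check, leaving the lone correct proposal.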

xAI’s Colossus supercluster in Memphis, TN, underpins Grok 4.0’s computational advantage. It comprises 150,000 NVIDIA H100 chips, 50,000 H200s, and 30,000 NVIDIA GB200 platforms, with plans to expand to one million chips. This infrastructure, built in record time with priority access to cutting-edge hardware, enables Grok to process diverse data types such as video and audio (vs. text-only rivals) at unmatched speeds. Furthermore, a continuous influx of new data from unique sources, including X’s 400 million monthly users, Tesla’s fleet of 8 million vehicles, and SpaceX, serves as an information wellspring for the Grok LLM platform, feeding a constant cycle of performance improvement.

Further, xAI dedicates equal resources to pre-training and post-training refinement, a novel approach consistent with Rich Sutton’s “Bitter Lesson,” which posits that raw computational scale and search capacity ultimately yield more long-term progress than attempts to inject handcrafted knowledge (which frequently serves short-term, tailored purposes and may not scale).

However, Grok 4.0 does not win under all conditions. OpenAI’s GPT-5 was released on Thursday with improved results. In the words of that “unbiased observer” Elon Musk, Grok 4 Heavy still outperforms GPT-5, but by a narrower margin, particularly on software engineering (SWE) tasks, i.e., coding. Notably, this should pose a particular threat to Anthropic’s Claude, which is well known for such tasks.

Observers should also be aware that Big Tech competitors (Google, Microsoft, Amazon) have taken note, with multiple multibillion-dollar projects underway for their own next-generation upgrades. Google’s LLM, Gemini Pro, is due for an upgrade in the next several months, and unconfirmed sources suggest that deployment of Google’s proprietary AI accelerator, the Ironwood TPU, could reach a whopping 1.5 million units. Add approximately 500,000 planned NVIDIA chips, and Google’s data centers may house a staggering 2 million chips at some point later this year.

Outlook: Grok 5 and Beyond

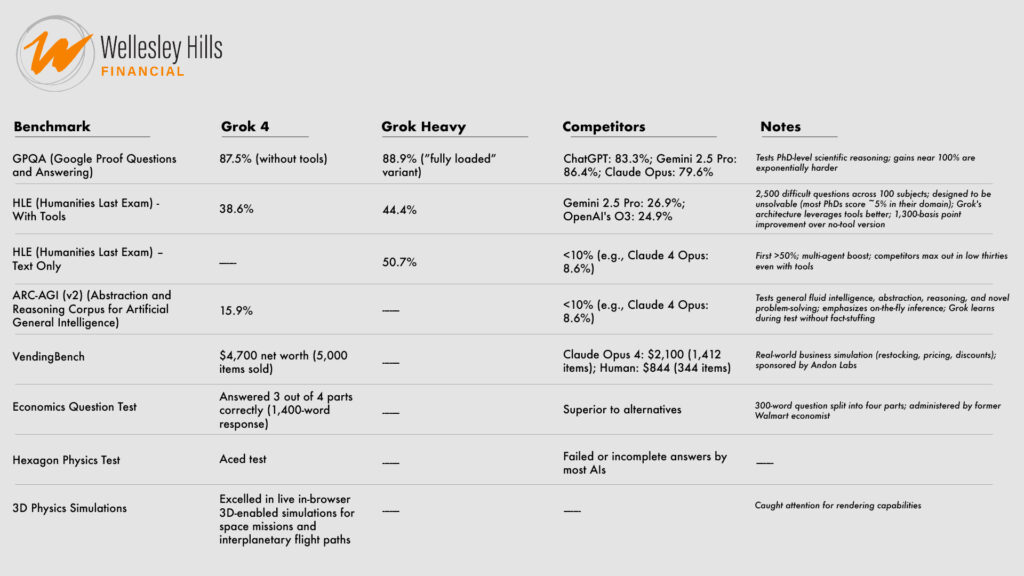

Remarkably, in less than two years since Grok’s initial v1.0 launch, its benchmark standing has improved significantly: Grok 3.0, released in February of this year, placed 5th or 6th, while Grok 4.0, arriving only a short time later for a major LLM upgrade, now holds a much more competitive position worldwide. This demonstrates the efficacy of a multi-agent, supercomputing approach, which, on the benchmarks shown above, may surpass larger single-model approaches.

Looking ahead, Grok 4.0 is poised to become an integral component of daily life, supported by integration into ecosystems spanning Tesla, X, SpaceX, Optimus, and Neuralink. In sum, Grok 4.0 not only delivers enhanced “cognitive” ability but also sets new standards for tool integration, scalability, and practical impact, heralding a new era for artificial intelligence across multiple sectors.

The forthcoming Grok 5.0, currently in training, will further scale agent count and introduce enhanced self-coding capabilities, broadening its applicability to areas such as interactive entertainment and molecular manufacturing.